Crawlab is a powerful Web Crawler Management Platform (WCMP) that can run web crawlers and spiders developed in various programming languages, including Python, Go, Node.js, Java and C#, as well as frameworks including Scrapy, Colly, Selenium and Puppeteer. It is used for running, managing and monitoring web crawlers, particularly in production environments where traceability, scalability and stability are the major factors considered. In this step-by-step guide I will show you how to install Crawlab on your Synology NAS using Docker & Portainer.

STEP 1

Please Support My work by Making a Donation.

STEP 2

Install Portainer using my step by step guide. If you already have Portainer installed on your Synology NAS, skip this STEP. Attention: Make sure you have installed the latest Portainer version.

STEP 3

Make sure you have a synology.me Wildcard Certificate. Follow my guide to get a Wildcard Certificate. If you already have a synology.me Wildcard certificate, skip this STEP.

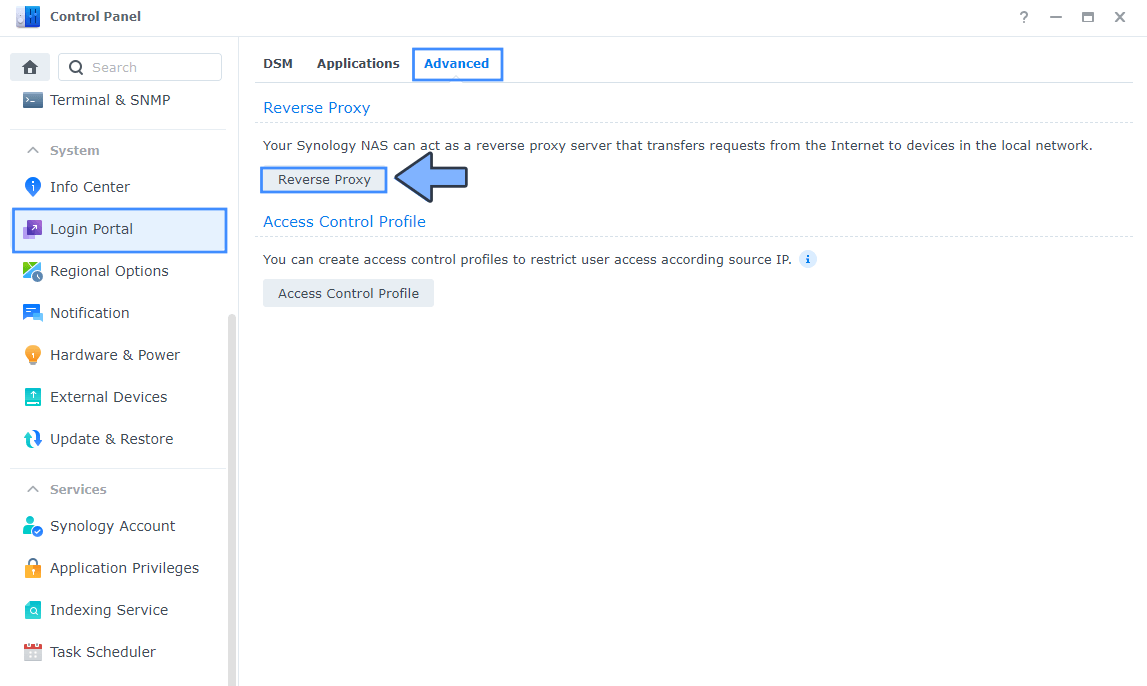

STEP 4

Go to Control Panel / Login Portal / Advanced Tab / click Reverse Proxy. Follow the instructions in the image below.

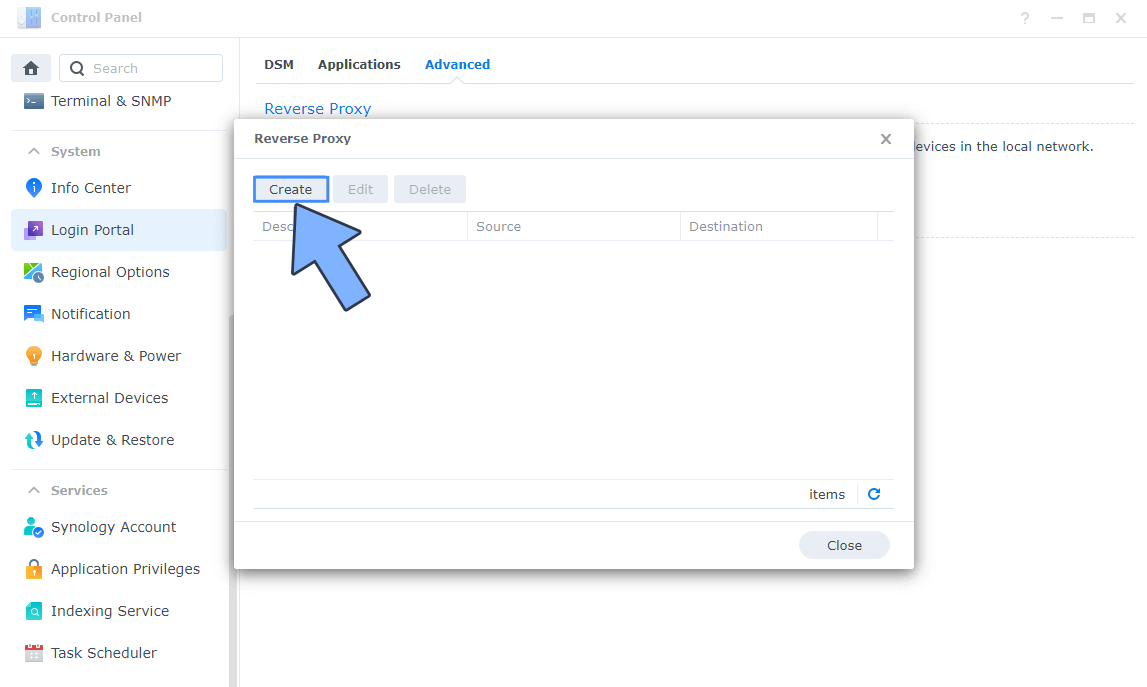

STEP 5

Now click the “Create” button. Follow the instructions in the image below.

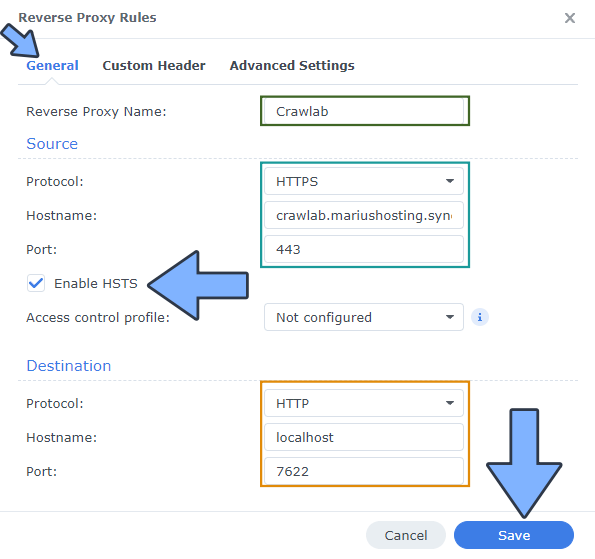

STEP 6

After you click the Create button, the window below will open. Follow the instructions in the image below.

In the General area, set the Reverse Proxy Name description to Crawlab. After that, enter the following settings:

Source:

Protocol: HTTPS

Hostname: crawlab.yourname.synology.me

Port: 443

Check Enable HSTS

Destination:

Protocol: HTTP

Hostname: localhost

Port: 7622
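
The destination port entered here is the host side of the port mapping used later in the stack (7622:8080), not the port Crawlab listens on inside the container. A small sketch in Python of how such a mapping reads; the helper name split_port_mapping is hypothetical and only serves as an illustration:

```python
def split_port_mapping(mapping):
    """Split a Compose short-syntax 'HOST:CONTAINER' port mapping into two integers."""
    host, container = mapping.split(":")
    return int(host), int(container)


host_port, container_port = split_port_mapping("7622:8080")
# The reverse proxy on the NAS talks to the host port;
# Crawlab itself listens on the container port.
print(f"reverse proxy -> localhost:{host_port} -> container:{container_port}")
```

If you change the host port in the stack, the Destination port of the reverse proxy rule has to change with it.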

STEP 7

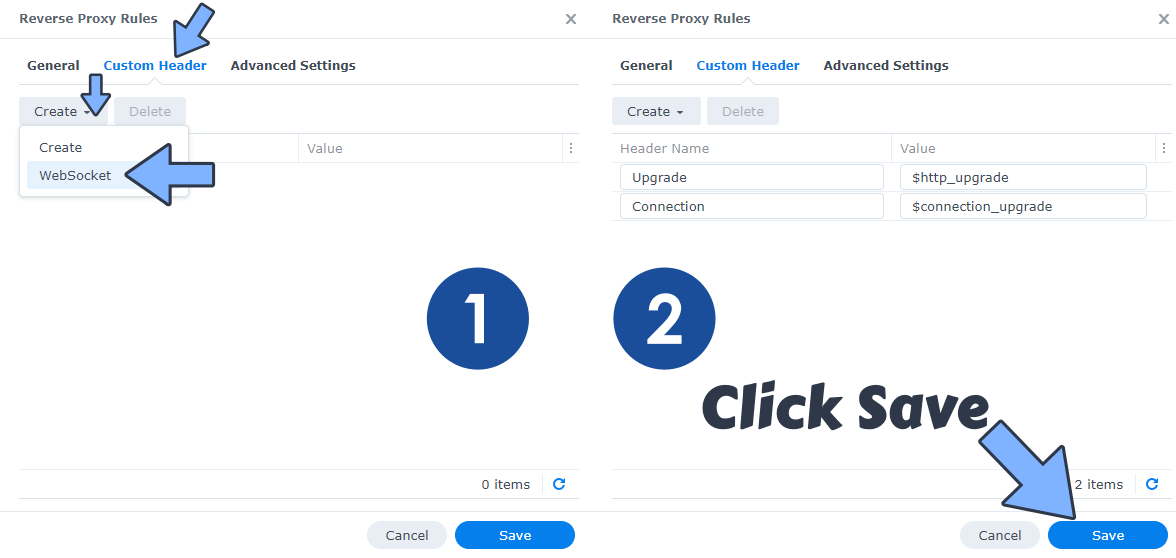

In the Reverse Proxy Rules window, click the Custom Header tab. Click Create and then, from the drop-down menu, click WebSocket. After you click on WebSocket, two Header Names and two Values will be added automatically. Click Save. Follow the instructions in the image below.

STEP 8

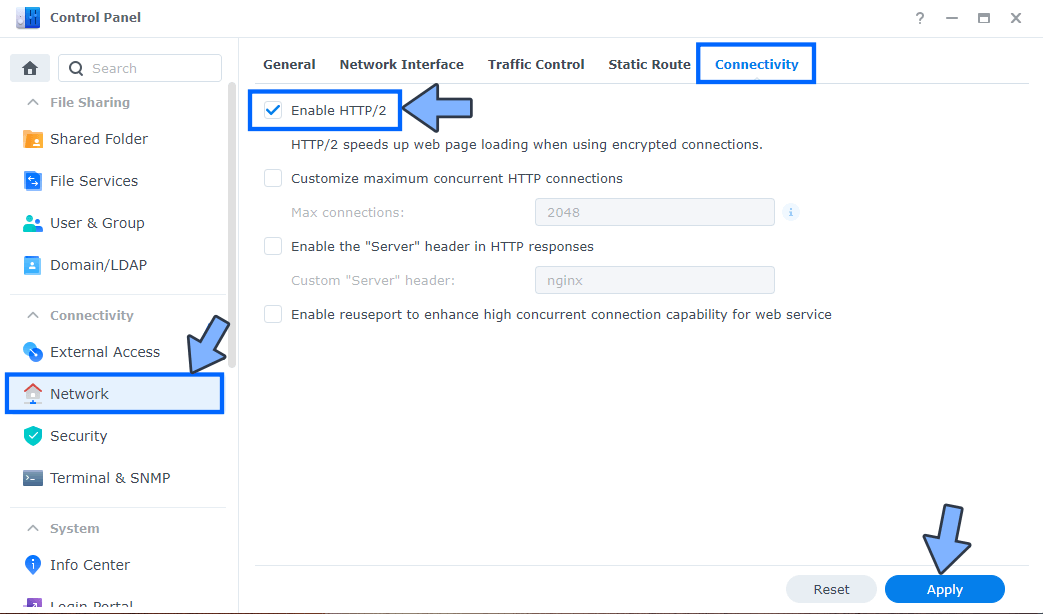

Go to Control Panel / Network / Connectivity tab / check Enable HTTP/2, then click Apply. Follow the instructions in the image below.

STEP 9

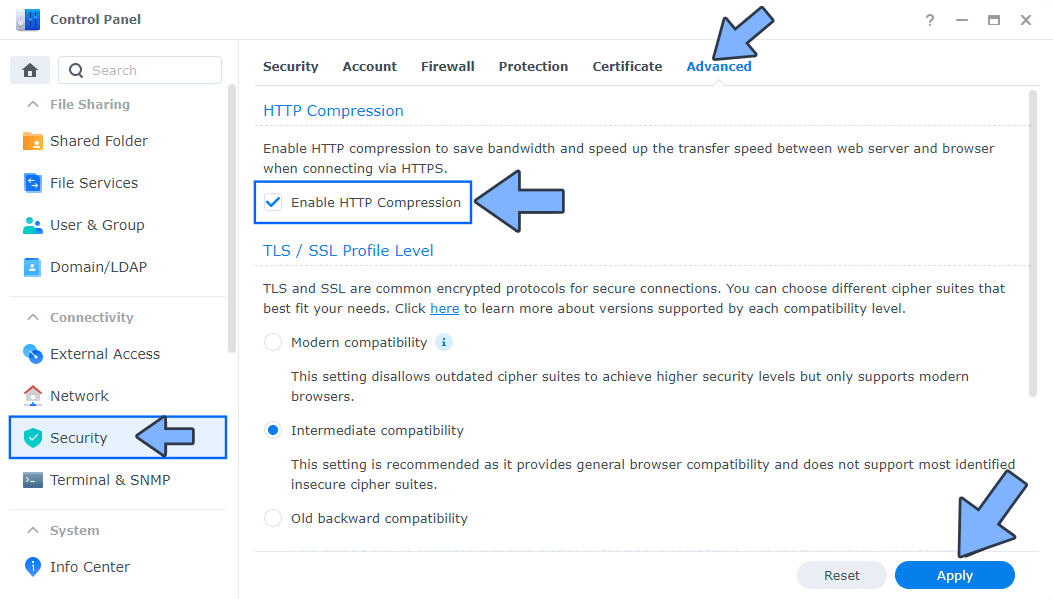

Go to Control Panel / Security / Advanced tab / check Enable HTTP Compression, then click Apply. Follow the instructions in the image below.

STEP 10

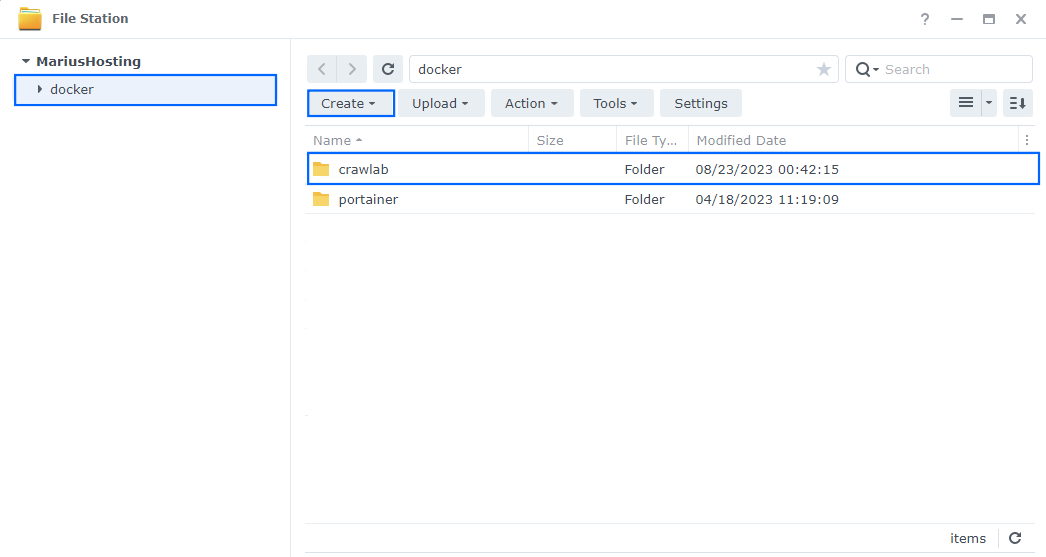

Go to File Station and open the docker folder. Inside the docker folder, create one new folder and name it crawlab. Follow the instructions in the image below.

Note: Be careful to enter only lowercase, not uppercase letters.

STEP 11

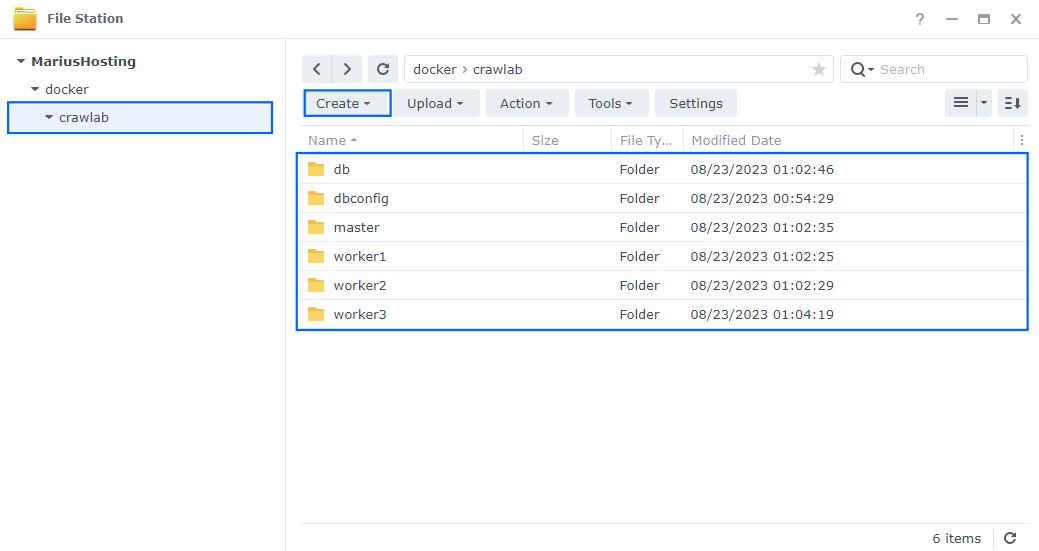

Now create six folders inside the crawlab folder that you created at STEP 10 and name them db, dbconfig, master, worker1, worker2, worker3. Follow the instructions in the image below.

Note: Be careful to enter only lowercase, not uppercase letters.
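
If you have SSH access to the NAS and prefer not to click through File Station, the folder layout from STEP 10 and STEP 11 can also be created by script. A minimal sketch in Python, assuming the docker shared folder lives at /volume1/docker (the path used in the volume mappings of the stack); the function name create_crawlab_folders is hypothetical:

```python
from pathlib import Path

# Subfolders named in STEP 11; all lowercase, matching the volume paths in the stack.
SUBFOLDERS = ("db", "dbconfig", "master", "worker1", "worker2", "worker3")


def create_crawlab_folders(docker_root="/volume1/docker"):
    """Create docker_root/crawlab and its six subfolders; return the folder paths."""
    base = Path(docker_root) / "crawlab"
    folders = []
    for name in SUBFOLDERS:
        folder = base / name
        # parents=True also creates the crawlab folder itself;
        # exist_ok=True makes the call safe to repeat.
        folder.mkdir(parents=True, exist_ok=True)
        folders.append(folder)
    return folders
```

Calling create_crawlab_folders() with no argument uses /volume1/docker. Pass a different root if your docker shared folder lives on another volume.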

STEP 12

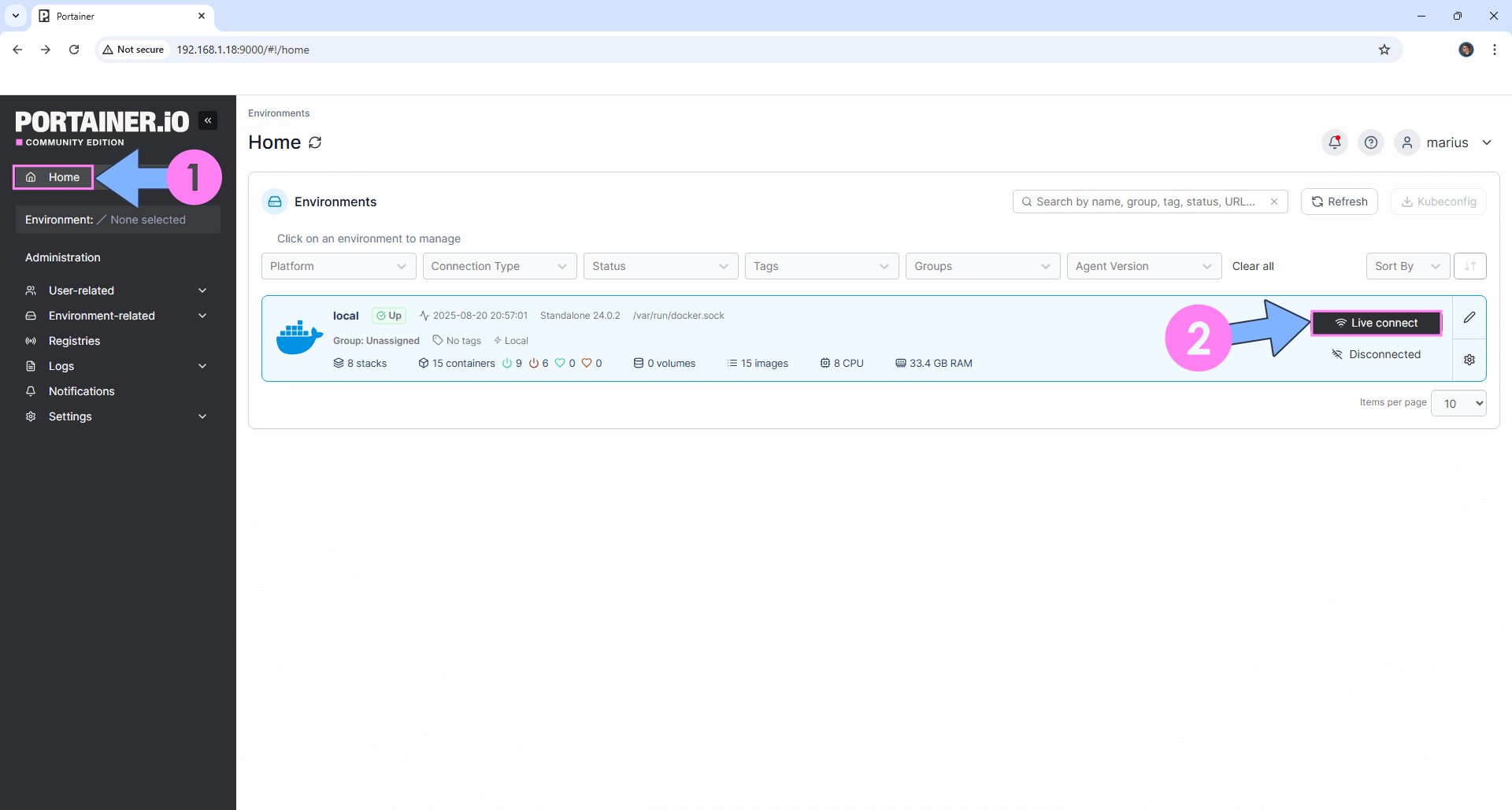

Log into Portainer using your username and password. On the left sidebar in Portainer, click on Home then Live connect. Follow the instructions in the image below.

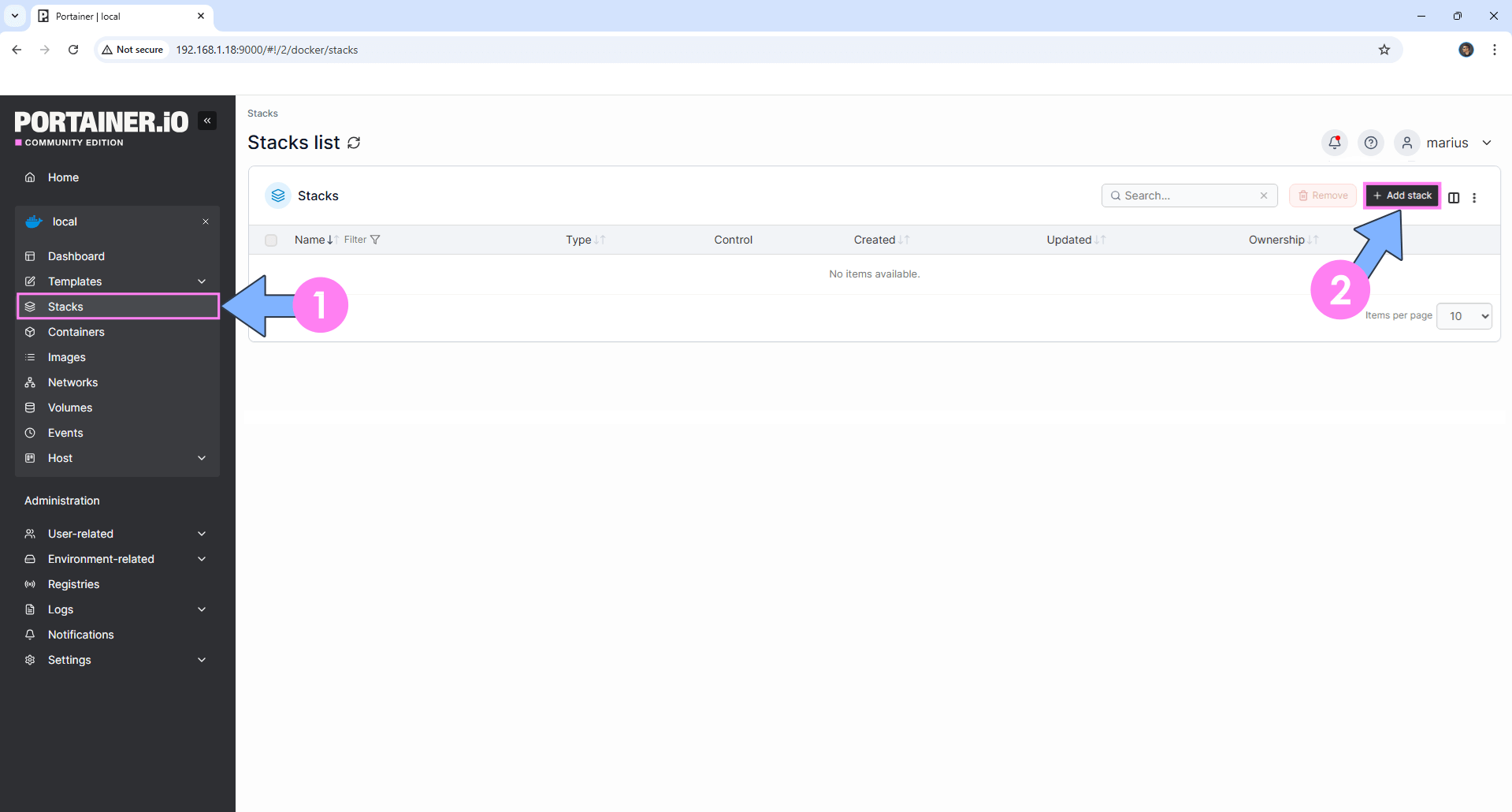

On the left sidebar in Portainer, click on Stacks then + Add stack. Follow the instructions in the image below.

STEP 13

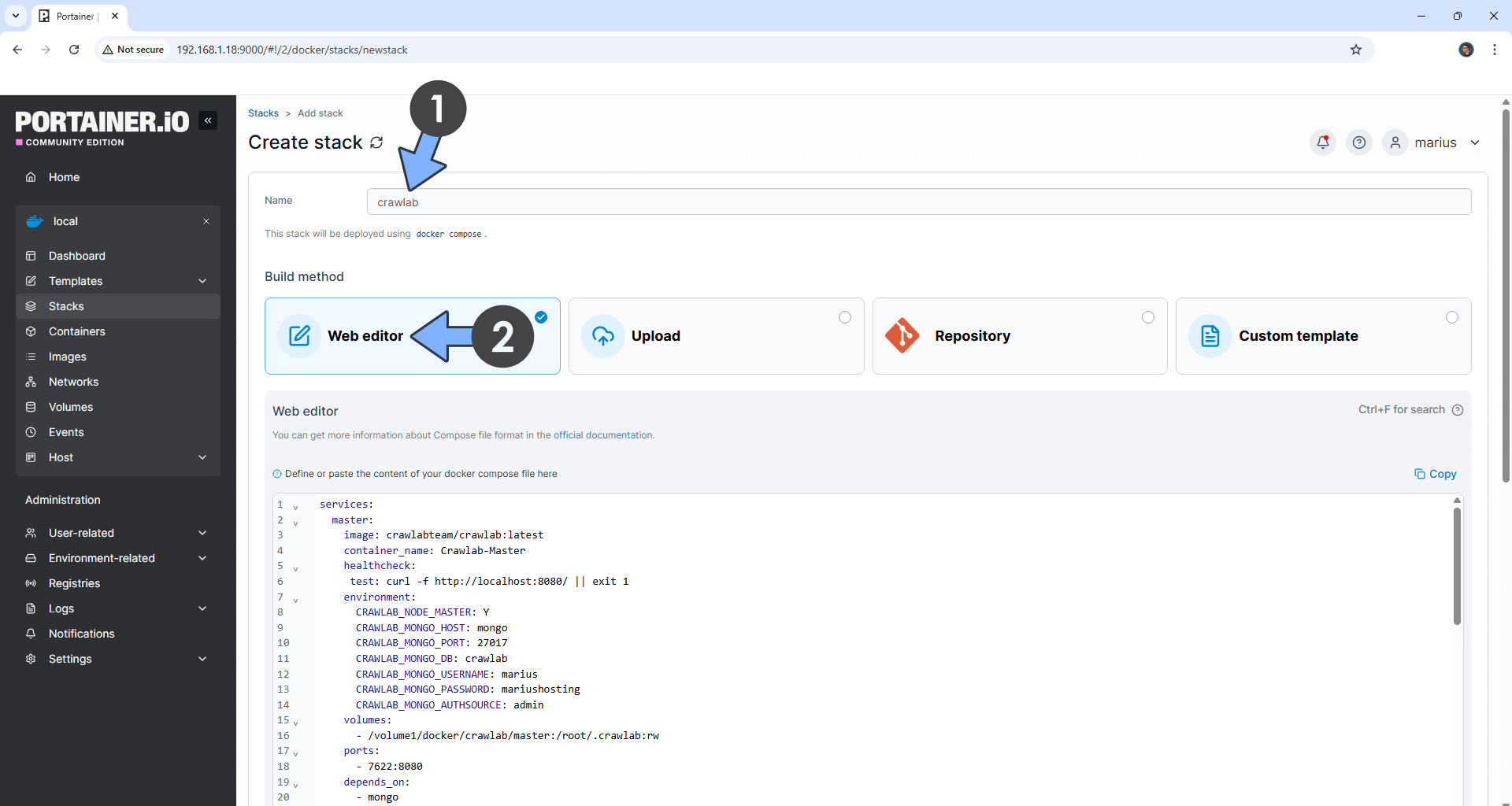

In the Name field type in crawlab. Follow the instructions in the image below.

services:
  master:
    image: crawlabteam/crawlab:latest
    container_name: Crawlab-Master
    healthcheck:
      test: curl -f http://localhost:8080/ || exit 1
    environment:
      CRAWLAB_NODE_MASTER: Y
      CRAWLAB_MONGO_HOST: mongo
      CRAWLAB_MONGO_PORT: 27017
      CRAWLAB_MONGO_DB: crawlab
      CRAWLAB_MONGO_USERNAME: marius
      CRAWLAB_MONGO_PASSWORD: mariushosting
      CRAWLAB_MONGO_AUTHSOURCE: admin
    volumes:
      - /volume1/docker/crawlab/master:/root/.crawlab:rw
    ports:
      - 7622:8080
    depends_on:
      - mongo
    restart: on-failure:5

  worker01:
    image: crawlabteam/crawlab:latest
    container_name: Crawlab-Worker1
    environment:
      CRAWLAB_NODE_MASTER: N
      CRAWLAB_GRPC_ADDRESS: master
      CRAWLAB_FS_FILER_URL: http://master:8080/api/filer
    volumes:
      - /volume1/docker/crawlab/worker1:/root/.crawlab:rw
    depends_on:
      - master
    restart: on-failure:5

  worker02:
    image: crawlabteam/crawlab:latest
    container_name: Crawlab-Worker2
    environment:
      CRAWLAB_NODE_MASTER: N
      CRAWLAB_GRPC_ADDRESS: master
      CRAWLAB_FS_FILER_URL: http://master:8080/api/filer
    volumes:
      - /volume1/docker/crawlab/worker2:/root/.crawlab:rw
    depends_on:
      - master
    restart: on-failure:5

  worker03:
    image: crawlabteam/crawlab:latest
    container_name: Crawlab-Worker3
    environment:
      CRAWLAB_NODE_MASTER: N
      CRAWLAB_GRPC_ADDRESS: master
      CRAWLAB_FS_FILER_URL: http://master:8080/api/filer
    volumes:
      - /volume1/docker/crawlab/worker3:/root/.crawlab:rw
    depends_on:
      - master
    restart: on-failure:5

  mongo:
    image: mongo:4.4
    container_name: Crawlab-DB
    environment:
      MONGO_INITDB_ROOT_USERNAME: marius
      MONGO_INITDB_ROOT_PASSWORD: mariushosting
    volumes:
      - /volume1/docker/crawlab:/root/.crawlab:rw
      - /volume1/docker/crawlab/db:/data/db:rw
      - /volume1/docker/crawlab/dbconfig:/data/configdb:rw
    ports:
      - 27017:27017
    healthcheck:
      test: echo 'db.stats().ok' | mongo localhost:27017/test --quiet
      interval: 10s
      timeout: 10s
      retries: 5
    restart: on-failure:5

Note: Before you paste the code above in the Web editor area below, change the values for CRAWLAB_MONGO_USERNAME and MONGO_INITDB_ROOT_USERNAME and type in your own username. marius is an example for a username. Both values should be the same.

Note: Before you paste the code above in the Web editor area below, change the values for CRAWLAB_MONGO_PASSWORD and MONGO_INITDB_ROOT_PASSWORD and type in your own password. mariushosting is an example for a password. Both values should be the same.
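
A mismatch between the two username values or the two password values is easy to introduce while editing, and it can be caught before you deploy. A minimal sketch in Python, assuming the stack text is available as a string; it uses a simple regular expression rather than a real YAML parser, and the function name find_credential_mismatches is hypothetical:

```python
import re

# Pairs of variables that must hold the same value, as described in the notes above.
PAIRS = (
    ("CRAWLAB_MONGO_USERNAME", "MONGO_INITDB_ROOT_USERNAME"),
    ("CRAWLAB_MONGO_PASSWORD", "MONGO_INITDB_ROOT_PASSWORD"),
)

# Matches lines such as "      CRAWLAB_MONGO_USERNAME: marius".
ENV_LINE = re.compile(r"^[ \t]*([A-Z_]+):[ \t]*(\S.*?)[ \t]*$", re.MULTILINE)


def find_credential_mismatches(stack_text):
    """Return the variable pairs whose values differ or are missing."""
    values = {m.group(1): m.group(2) for m in ENV_LINE.finditer(stack_text)}
    return [
        (first, second)
        for first, second in PAIRS
        if values.get(first) is None or values.get(first) != values.get(second)
    ]
```

An empty list means both pairs agree; any pair returned needs to be corrected in the Web editor before you deploy the stack.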

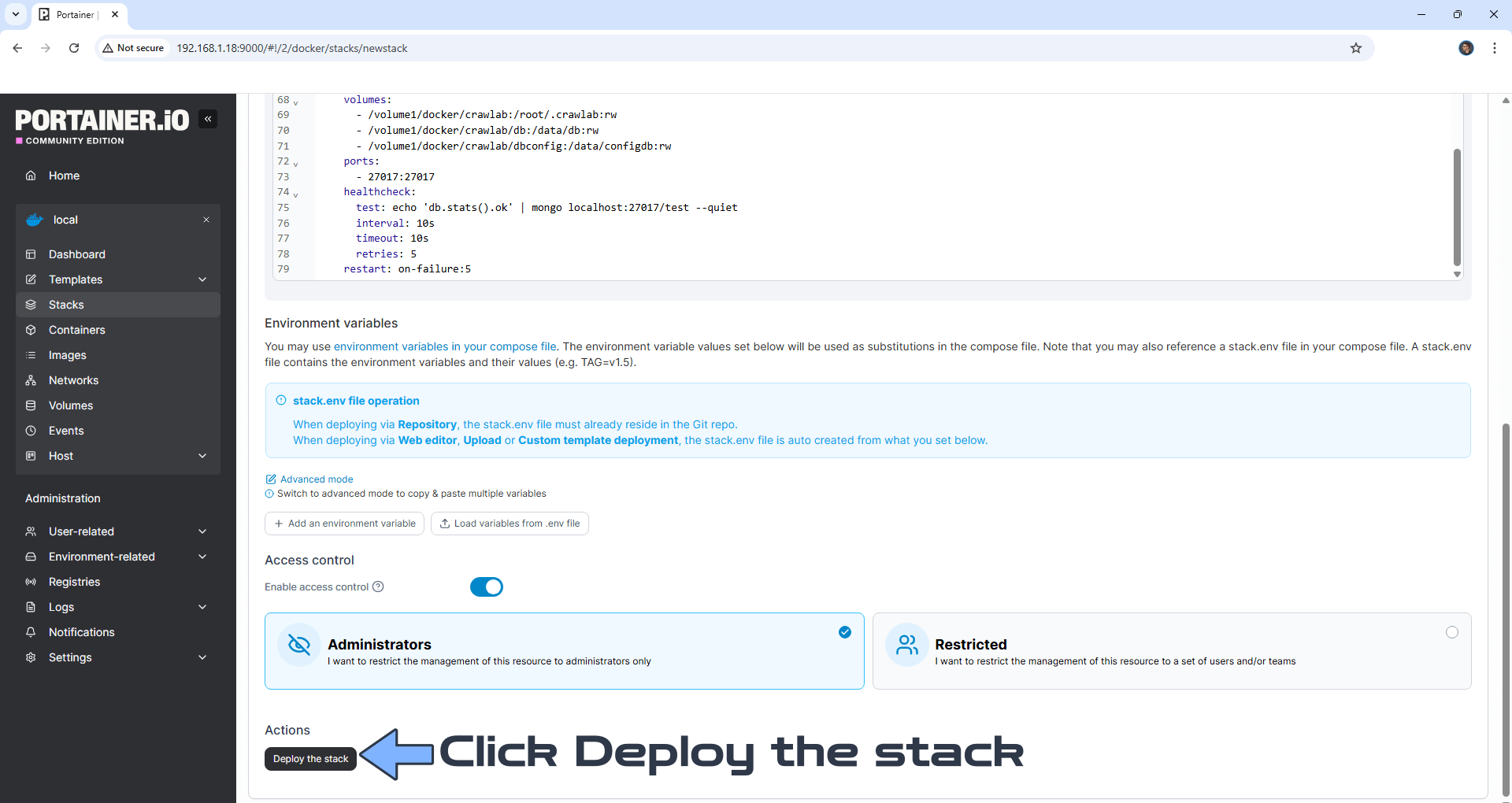

STEP 14

Scroll down on the page until you see a button named Deploy the stack. Click on it. Follow the instructions in the image below. The installation process can take up to a few minutes, depending on your Internet connection speed.

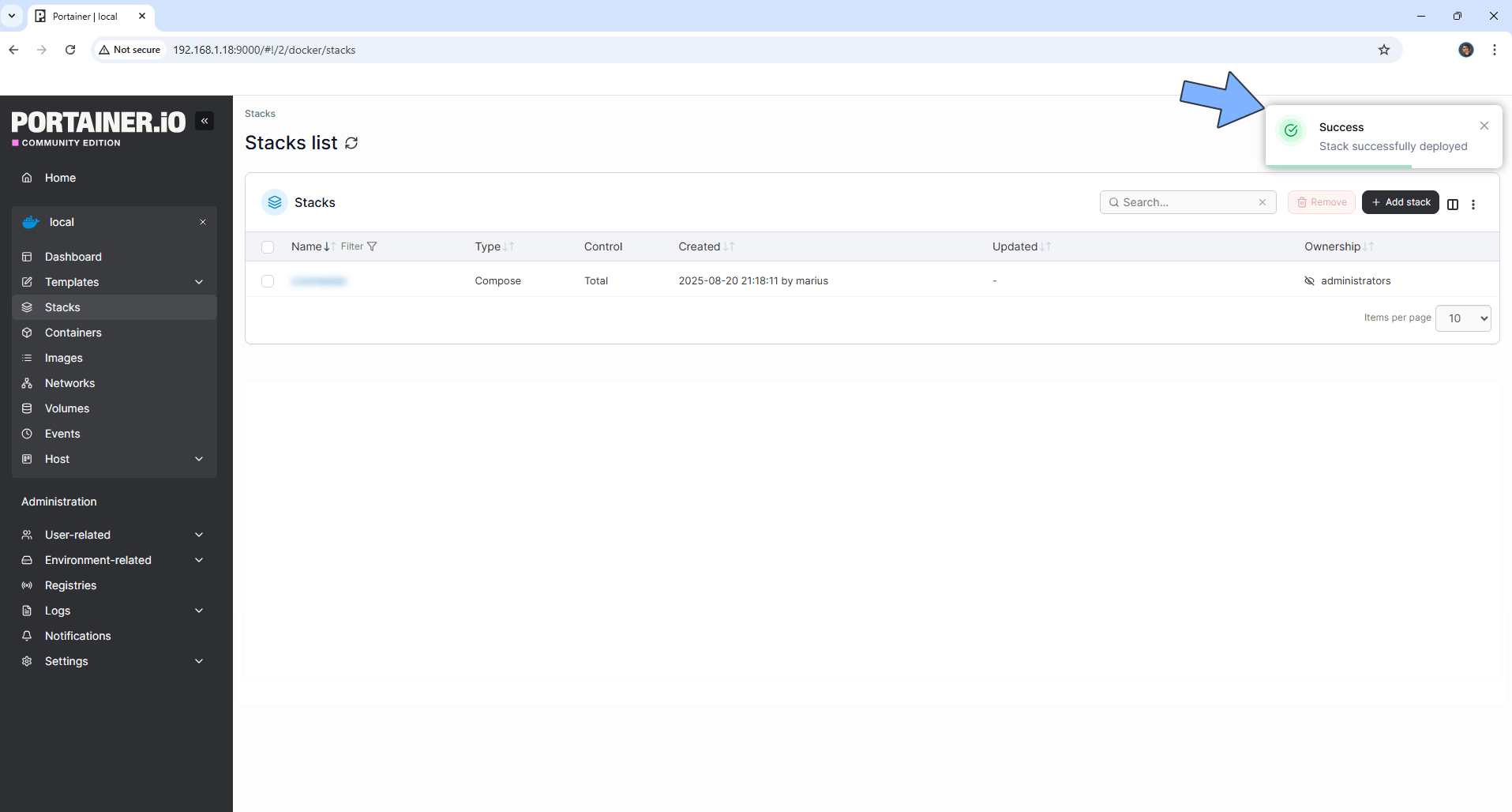

STEP 15

If everything goes right, you will see the following message at the top right of your screen: “Success Stack successfully deployed”.
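
The success message only confirms that Portainer created the stack; the master container may still need a moment before it answers requests. A minimal sketch in Python that polls the web interface, assuming it is run from a machine on the same network as the NAS. The function name wait_for_http and its defaults are hypothetical; port 7622 is the host port mapped in the stack:

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url, attempts=10, delay=5.0, timeout=5.0):
    """Return True as soon as url answers with an HTTP status below 400, else False."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                if response.status < 400:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # refused, timed out, or HTTP error: not ready yet
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

For example, wait_for_http("http://NAS_IP:7622/") with NAS_IP replaced by the local address of your NAS checks the container directly, without going through the reverse proxy.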

STEP 16

🟢Please Support My work by Making a Donation. Almost 99.9% of the people who install something using my guides forget to support my work, or just ignore STEP 1. I’ve been very honest about this aspect of my work since the beginning: I don’t run any ADS, I don’t require subscriptions, paid or otherwise, I don’t collect IPs or emails, and I don’t have any referral links from Amazon or other merchants. I also don’t have any POP-UPs or COOKIES. I have repeatedly been told over the years how much I have contributed to the community. It’s something I love doing, and I have been honest about my passion since the beginning. But I also Need The Community to Support me Back to be able to continue doing this work.

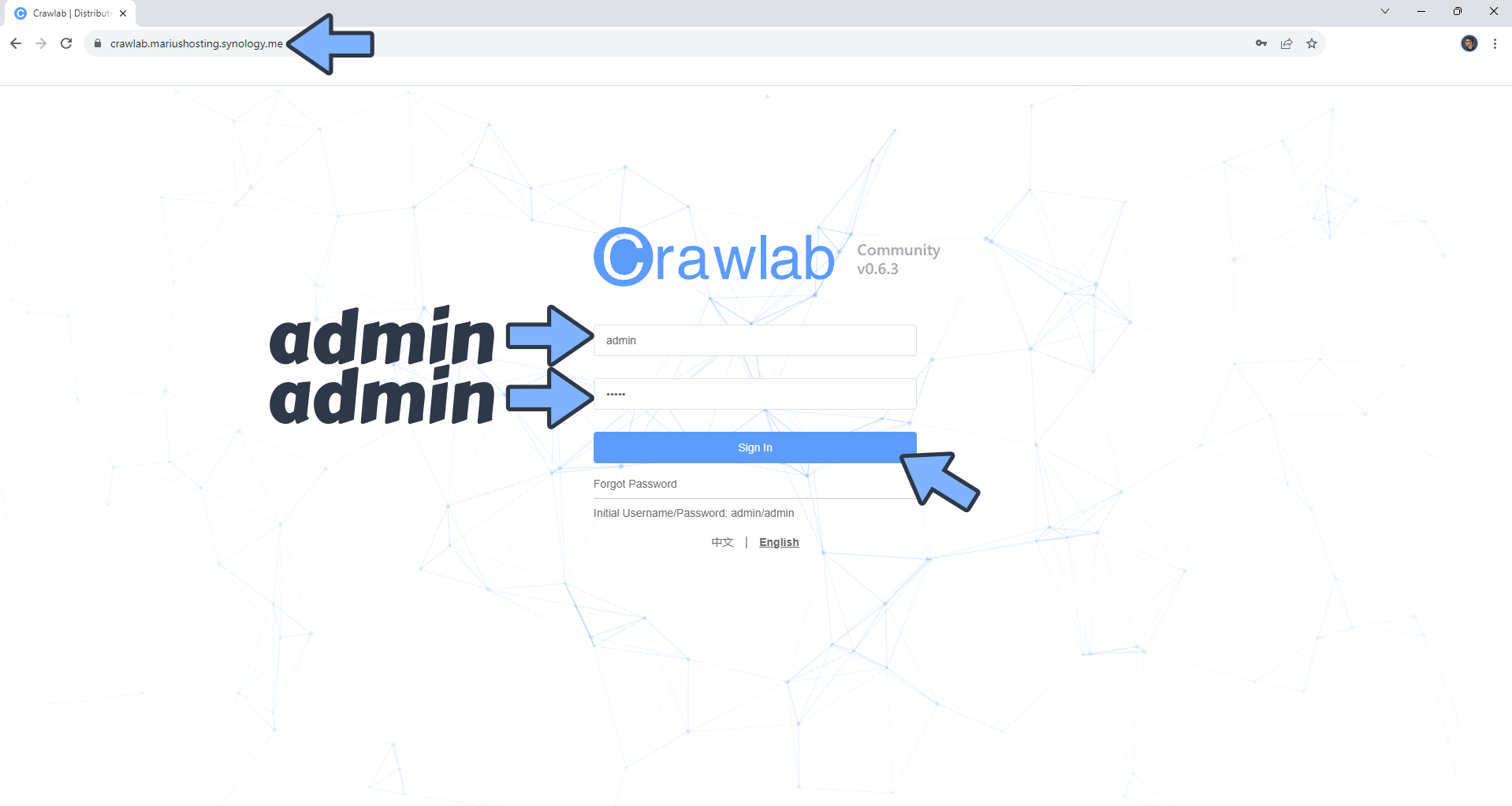

STEP 17

Now open your browser and type in your HTTPS address like this: https://crawlab.yourname.synology.me (in my case it’s https://crawlab.mariushosting.synology.me). Type in the default username and password (both are admin on a fresh Crawlab installation), then click Sign in. Follow the instructions in the image below.

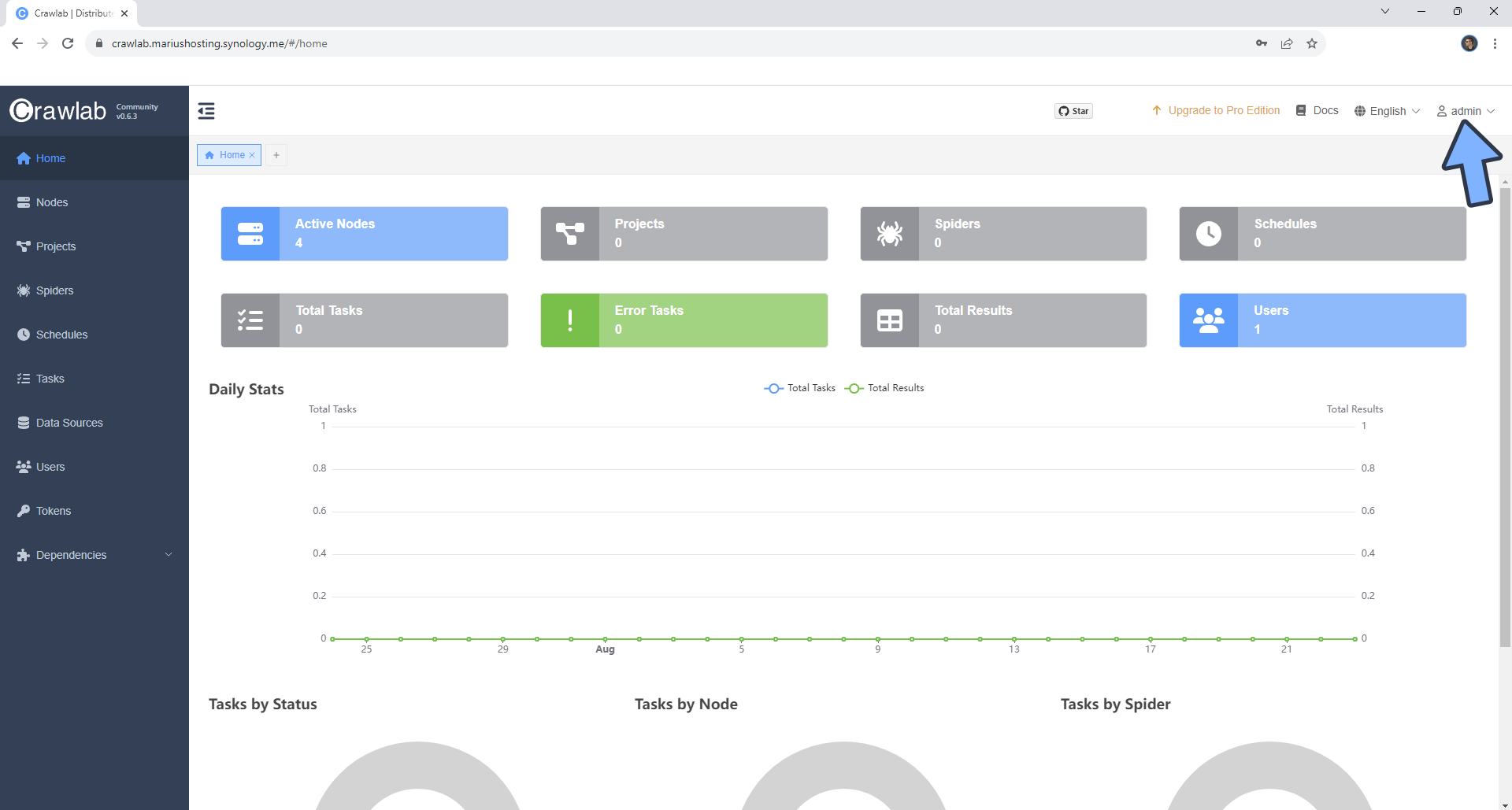

STEP 18

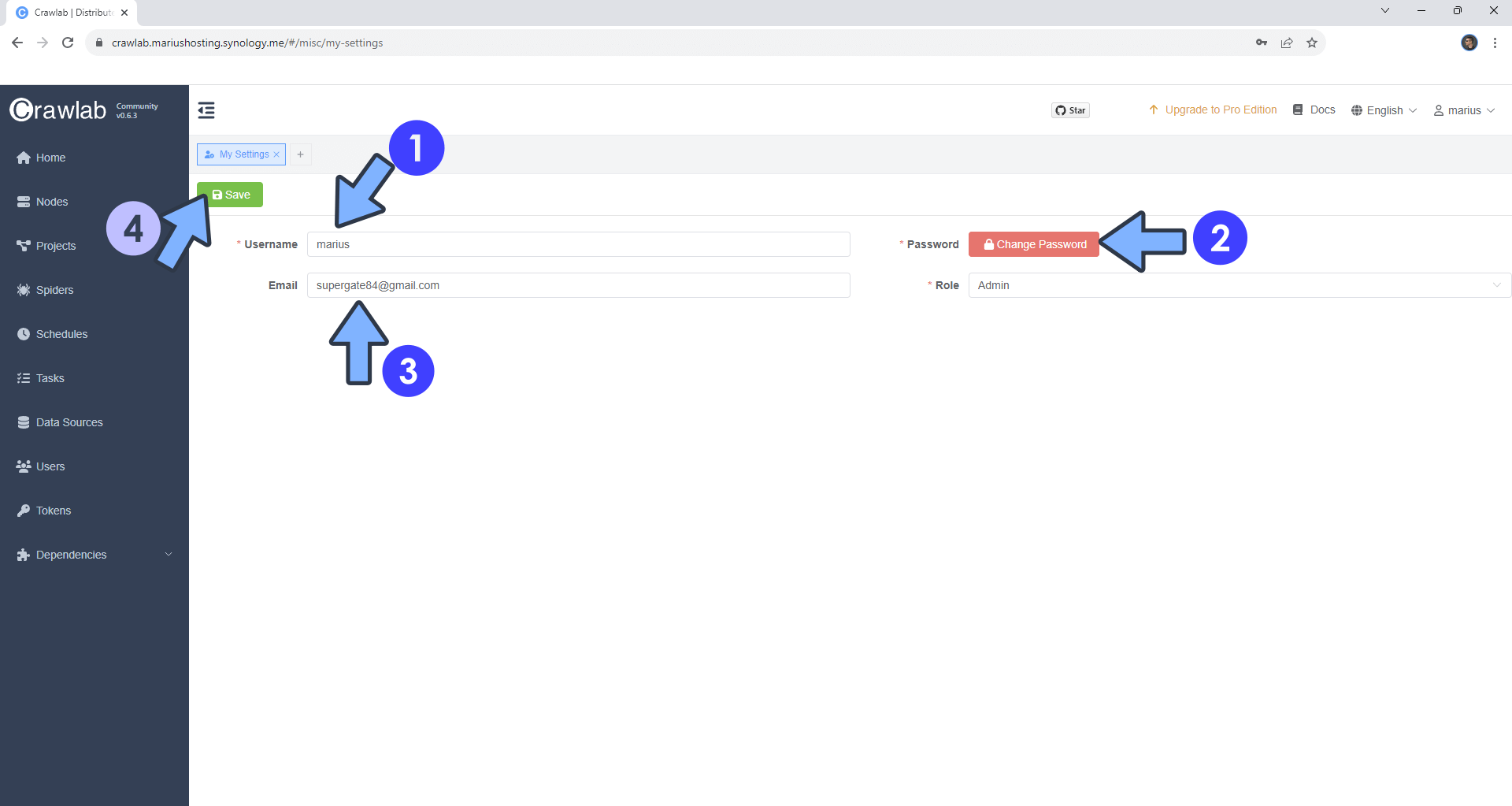

Your Crawlab dashboard at a glance! At the top right of the page click admin then settings to change the default admin username and password. Follow the instructions in the image below.

STEP 19

Add your own Username, Password and Email, then click Save. Follow the instructions in the image below.

STEP 20

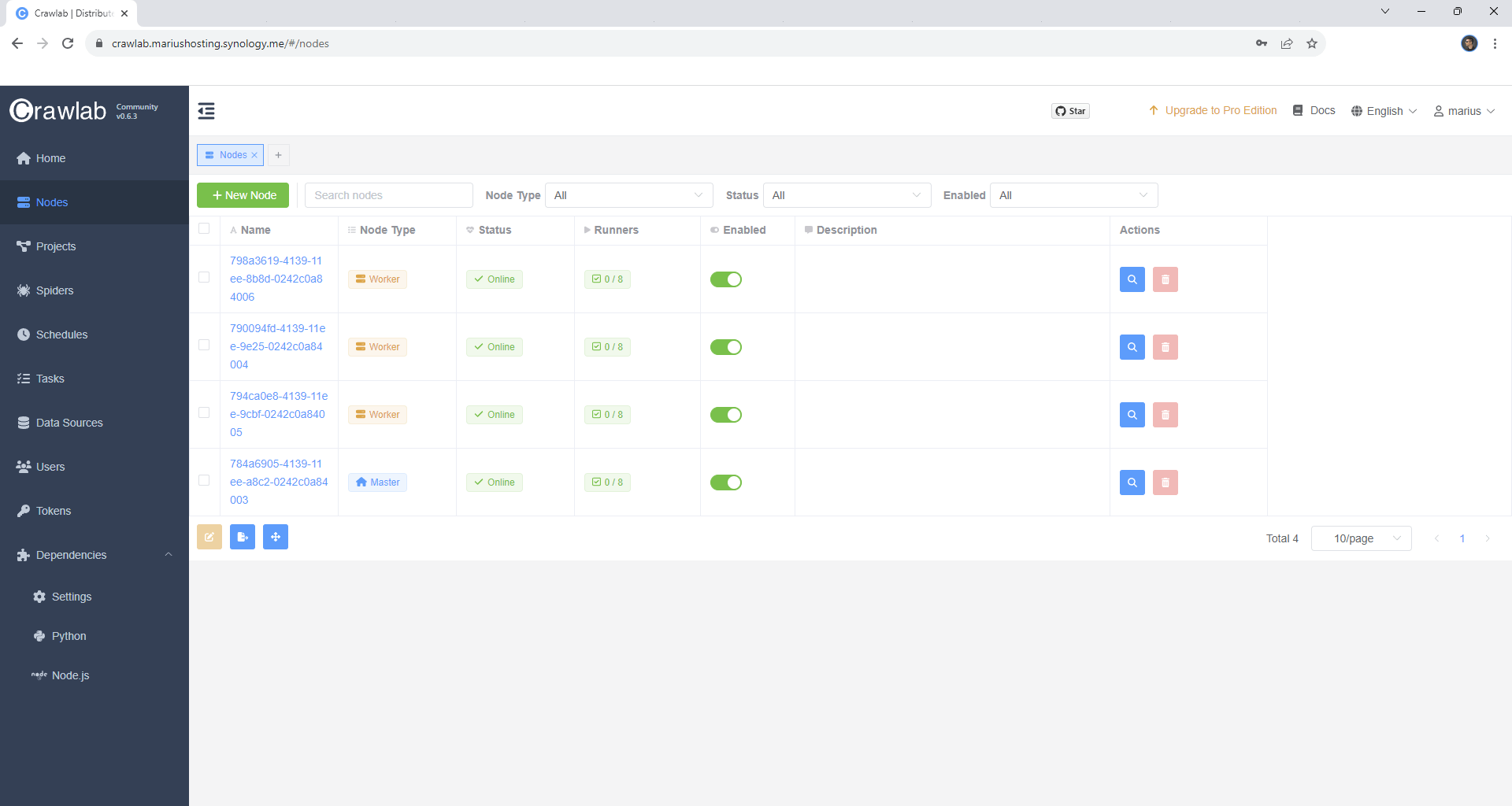

Your Crawlab nodes at a glance!

Enjoy Crawlab!

If you encounter issues while using this container, make sure to check out the Common Docker issues article.

Note: Can I run Docker on my Synology NAS? See the supported models.

Note: How to Back Up Docker Containers on your Synology NAS.

Note: Find out how to update the Crawlab container with the latest image.

Note: How to Free Disk Space on Your NAS if You Run Docker.

Note: How to Schedule Start & Stop For Docker Containers.

Note: How to Activate Email Notifications.

Note: How to Add Access Control Profile on Your NAS.

Note: How to Change Docker Containers Restart Policy.

Note: How to Use Docker Containers With VPN.

Note: Convert Docker Run Into Docker Compose.

Note: How to Clean Docker.

Note: How to Clean Docker Automatically.

Note: Best Practices When Using Docker and DDNS.

Note: Some Docker Containers Need WebSocket.

Note: Find out the Best NAS Models For Docker.

Note: Activate Gmail SMTP For Docker Containers.

This post was updated on Wednesday / February 4th, 2026 at 11:04 PM